In recent years, AI chatbots like ChatGPT, Gemini, and Claude have become very popular. They have significantly changed how people approach tasks such as programming, homework, and writing, for better or worse.

However, most of the popular AI models are closed: they run as proprietary software on the provider’s servers, not locally on your computer. Your message is sent to a server, processed there, and the result is returned.

Nevertheless, it is quite easy to run AI models locally on your computer using Ollama, a tool written in Go for managing and running large language models (LLMs). Keep in mind, though, that without a powerful computer you won’t be able to run the best models, but you can still experiment with smaller ones.

The first step is to install Ollama. You can use the official installer, build from source, or install it via your Linux distro’s package manager. On Fedora, for example:

$ sudo dnf install ollama

Once installed, start the Ollama server by typing:

$ ollama serve

Leave the server running and, in another terminal, run a model by typing:

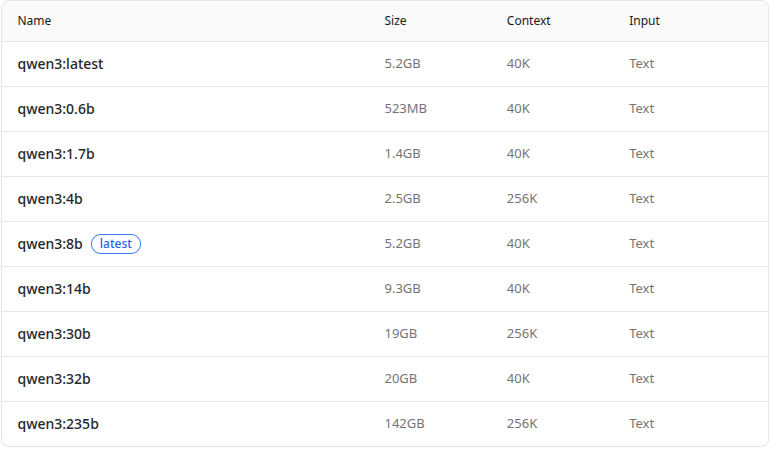

ollama run <model name> There are several options, such as DeepSeek and GPT-OSS. However, on a weaker machine without a dedicated GPU, larger models won’t run. That’s why you should look for models with fewer parameters — for example, in the Qwen3 models:

As we can see, there are many. The ‘b’ stands for billions of parameters, so qwen3:8b has 8 billion parameters and qwen3:0.6b has 600 million. Running a large model requires not only a capable GPU but also plenty of VRAM. Smaller models, up to 4 billion parameters, can run on the CPU alone.
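To make the tag arithmetic concrete, here is a minimal Python sketch that reads the parameter count out of a tag and gives a rough idea of how much memory the weights alone would need. The function names are hypothetical, and the 4 bits per weight is an assumed round figure for a quantized model, not a value reported by Ollama. Real memory use is higher, because the context cache and runtime overhead are not counted.

```python
import re


def parameters_from_tag(tag: str) -> float:
    """Return the parameter count encoded in a tag such as 'qwen3:8b'."""
    _, _, size = tag.partition(":")          # 'qwen3:0.6b' -> '0.6b'
    match = re.fullmatch(r"(\d+(?:\.\d+)?)([bm])", size.lower())
    if match is None:
        raise ValueError(f"no parameter count found in tag: {tag!r}")
    number, unit = match.groups()
    scale = 1e9 if unit == "b" else 1e6      # 'b' = billions, 'm' = millions
    return float(number) * scale


def estimated_weight_gib(parameters: float, bits_per_weight: float = 4.0) -> float:
    """Rough size of the weights in GiB (assumption: 4 bits per weight)."""
    return parameters * bits_per_weight / 8 / 2**30


for tag in ("qwen3:0.6b", "qwen3:4b", "qwen3:8b"):
    params = parameters_from_tag(tag)
    print(f"{tag}: {params:,.0f} parameters, ~{estimated_weight_gib(params):.1f} GiB of weights")
```

Treat the result as a lower bound when deciding whether a model is worth trying on your machine.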

In my tests, the best small models were Qwen3, Gemma3, and Llama 3.2. They are small but can still be useful. Others, like the basic versions of DeepSeek R1, make many mistakes and can’t even speak Portuguese properly.

For example, to run the 1-billion-parameter Gemma3, you would do:

$ ollama run gemma3:1b

>>> Hello, how are you?

Hello, I’m fine, thank you for asking! How are you doing? 😊

And you, how are you? How can I help?

To list models, use ollama list, and to remove one, use ollama rm <model>.
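The same server can also be reached from a script, which is handy once you want more than an interactive chat. The sketch below uses only Python's standard library and assumes the server started by ollama serve is listening on its default address, http://localhost:11434, and that the /api/generate and /api/tags endpoints of Ollama's REST API are available. The function names are hypothetical helpers for this illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default address of `ollama serve`


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request that asks for one complete, non-streamed reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.load(reply)["response"]


def list_models(timeout: float = 10.0) -> list[str]:
    """Return the names of the locally installed models."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=timeout) as reply:
        return [entry["name"] for entry in json.load(reply)["models"]]
```

With the server running and gemma3:1b already downloaded, calling ask("gemma3:1b", "Hello, how are you?") should return a reply much like the one in the chat above, and list_models() should report the same models as ollama list.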

How to Run on Your Phone

To run it on your phone, install Termux (an open-source Linux terminal for Android), then install Ollama with pkg install ollama and follow the same steps as above.